Behind the scenes of Pix4Dmapper

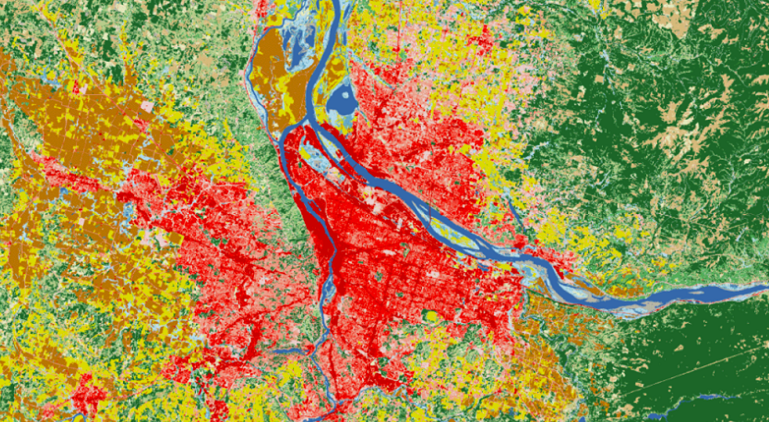

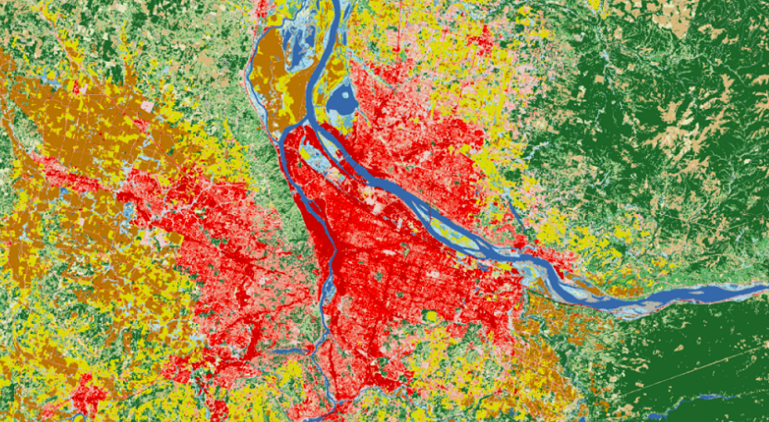

Classification, both supervised and unsupervised, has been applied to photogrammetry data for decades. Most traditional techniques relied on 2D or 2.5D products, for example detecting edges on a digital surface model (DSM) or on an orthomosaic generated from satellite imagery for land-cover classification.
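To illustrate the 2.5D approach, here is a minimal sketch of edge detection on a DSM raster by thresholding the magnitude of the elevation gradient. The function name `dsm_edges` and the threshold value are assumptions for illustration; production land-cover pipelines typically use more elaborate detectors and post-processing.

```python
import numpy as np


def dsm_edges(dsm: np.ndarray, cell_size: float, slope_threshold: float) -> np.ndarray:
    """Flag DSM cells whose elevation gradient exceeds a threshold (hypothetical helper).

    dsm: 2D array of elevations in meters (2.5D: one height per cell).
    cell_size: raster resolution in meters.
    slope_threshold: gradient magnitude (m/m) above which a cell counts as an edge.
    """
    # Central differences in the interior, one-sided differences at the borders.
    dz_dy, dz_dx = np.gradient(dsm, cell_size)
    return np.hypot(dz_dx, dz_dy) > slope_threshold


# A flat 6x6 patch with a 2x2-cell "building" 10 m tall in the middle.
dsm = np.zeros((6, 6))
dsm[2:4, 2:4] = 10.0
edges = dsm_edges(dsm, cell_size=1.0, slope_threshold=1.0)
print(int(edges.sum()))  # 12 cells around and on the building footprint
```

Because a DSM stores only one height per cell, anything under a canopy or overhang is invisible to this kind of detector, which is one reason true 3D point clouds became attractive.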

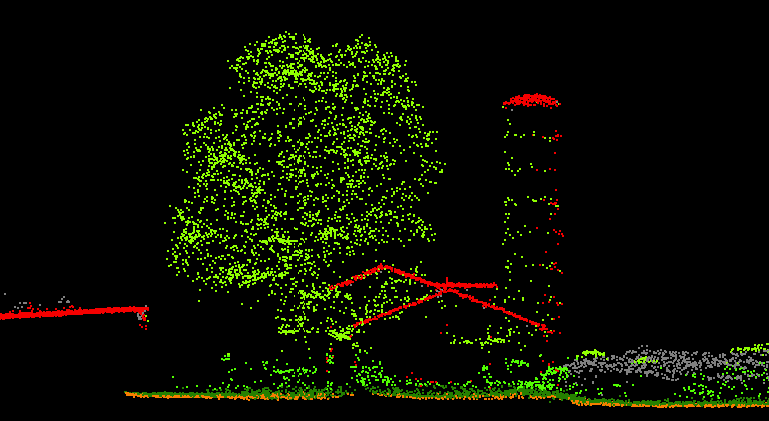

Then came the age of full-waveform LiDAR (Light Detection And Ranging), which produces a point cloud with true 3D information. Thanks to accurate distance measurements and the ability to record the full returned waveform of each laser pulse, LiDAR generates a precise, clean point cloud that makes 3D classification more reliable.

Categorizing points into ASPRS-defined classes based on 3D geometry opened up many more LiDAR applications, although most users focused only on distinguishing terrain from non-terrain objects.
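For reference, the ASPRS LAS specification assigns numeric codes to standard point classes. The sketch below lists a subset of those codes (as defined in LAS 1.4) and splits points into terrain and non-terrain, the task most LiDAR users focused on. The helper `split_terrain`, treating only class 2 as terrain, and dropping noise points are assumptions made for illustration.

```python
from enum import IntEnum


class AsprsClass(IntEnum):
    """Subset of the ASPRS LAS 1.4 standard point classification codes."""
    CREATED_NEVER_CLASSIFIED = 0
    UNCLASSIFIED = 1
    GROUND = 2
    LOW_VEGETATION = 3
    MEDIUM_VEGETATION = 4
    HIGH_VEGETATION = 5
    BUILDING = 6
    LOW_POINT_NOISE = 7
    WATER = 9


def split_terrain(codes):
    """Return (terrain, non_terrain) index lists for a sequence of class codes.

    Assumption for this sketch: only GROUND counts as terrain, and
    LOW_POINT_NOISE points are dropped from both lists.
    """
    terrain, non_terrain = [], []
    for i, code in enumerate(codes):
        if code == AsprsClass.LOW_POINT_NOISE:
            continue
        if code == AsprsClass.GROUND:
            terrain.append(i)
        else:
            non_terrain.append(i)
    return terrain, non_terrain


terrain, non_terrain = split_terrain([2, 2, 5, 6, 7, 1])
print(terrain, non_terrain)  # [0, 1] [2, 3, 5]
```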

Evolution: how people used to obtain semantic information

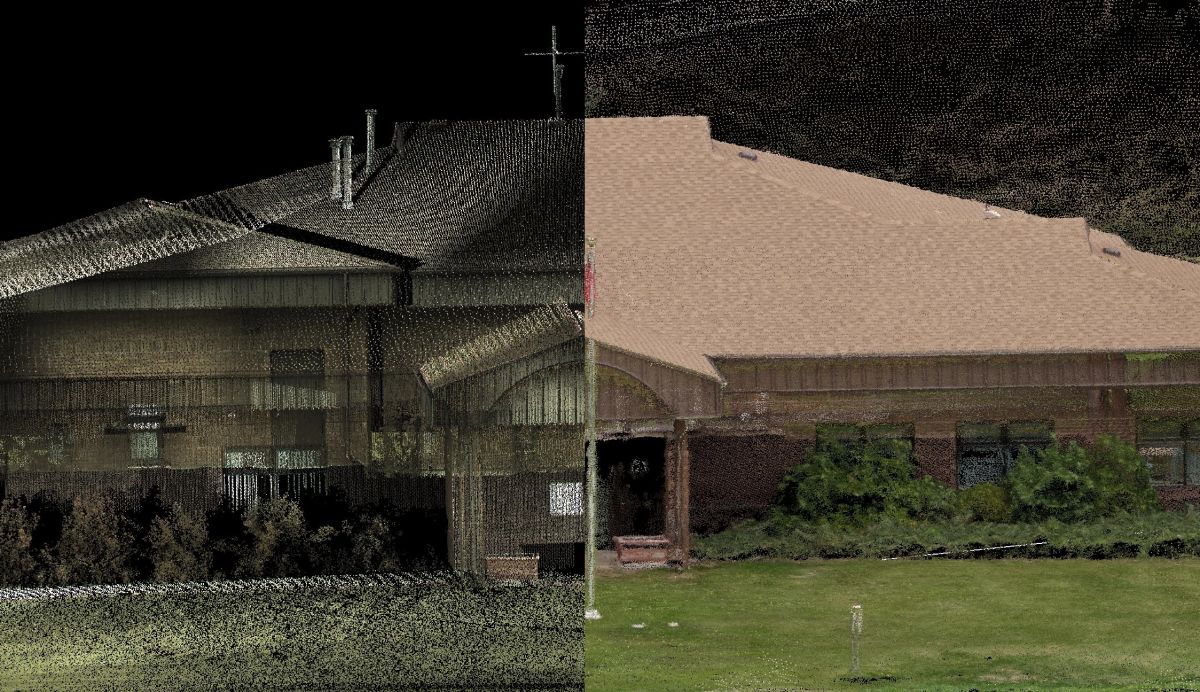

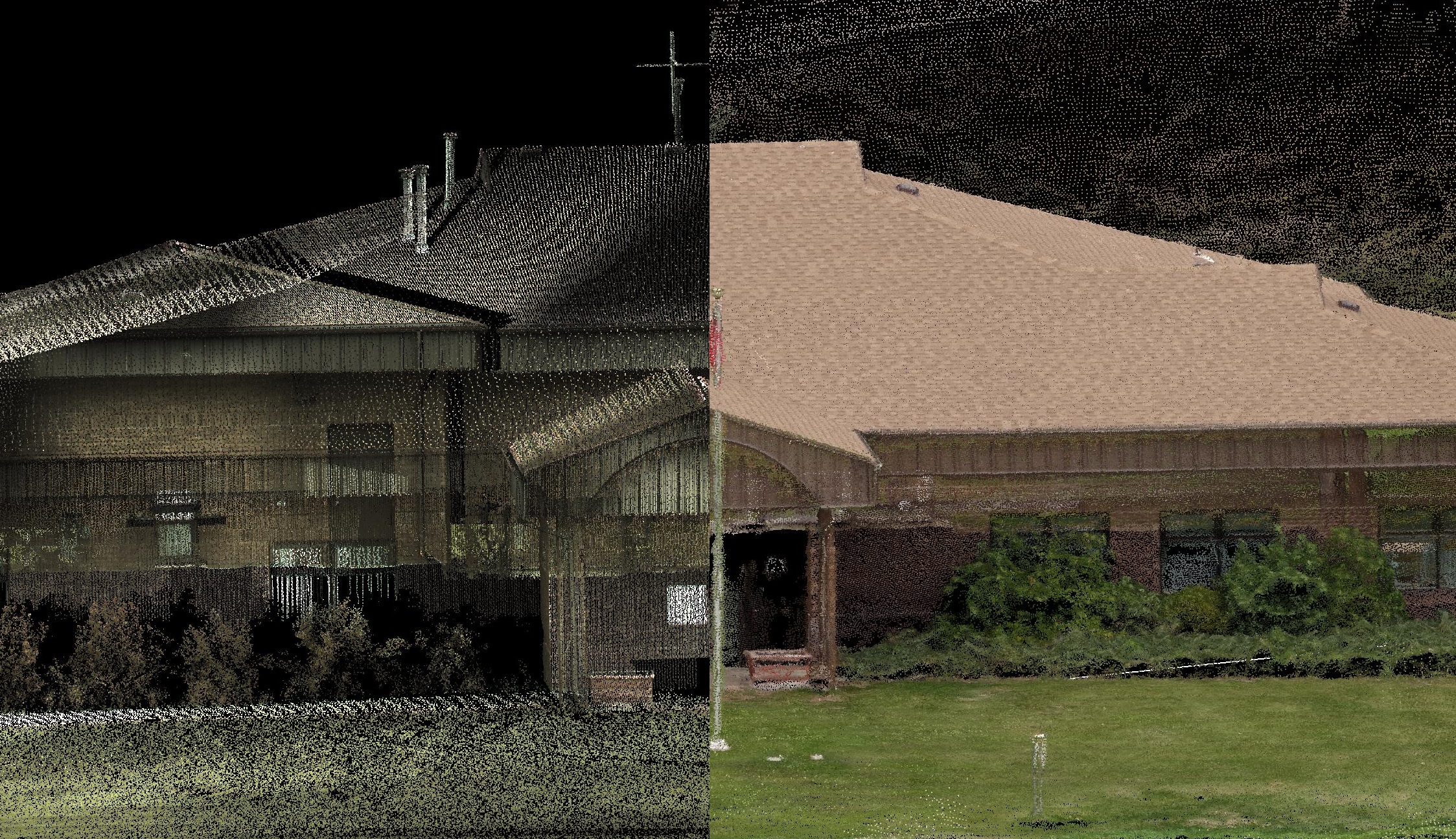

With the rapid growth of the drone market in recent years, people began to consider applying similar techniques to drone photogrammetry. However, the first and biggest problem appeared right away: photogrammetry point clouds tend to be noisier than laser-scanned ones, especially after dense matching.

Unlike active remote sensing methods such as LiDAR or terrestrial laser scanning, photogrammetry generates 3D point clouds purely from image content. This makes it prone to artifacts when reconstructing objects with reflective surfaces or uniform textures, such as metal or white walls.

A new page for drone-photogrammetry point cloud classification

Knowing the limitations of photogrammetry-generated point clouds, we researched various classification methods. We found that most point classification methods applied to drone photogrammetry still rely on 3D geometry alone and require user input to define parameters such as areas and angles.

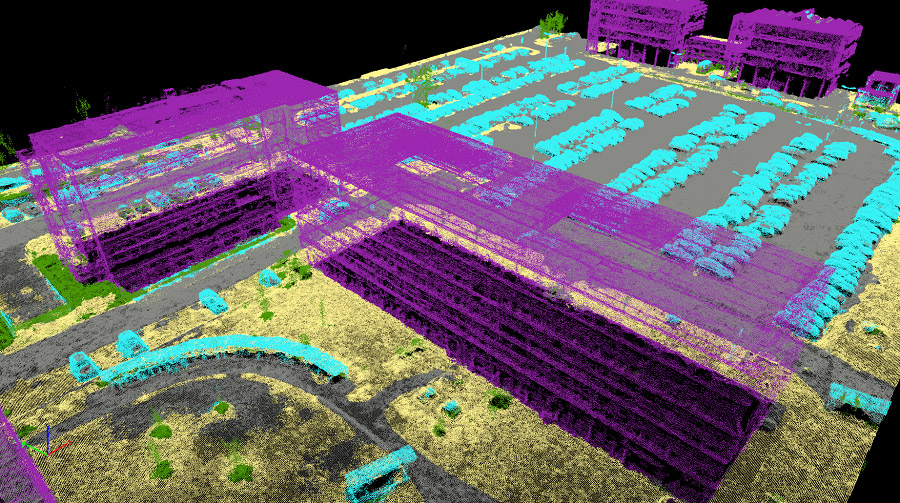

Although it contains more artifacts, a photogrammetry point cloud has one great advantage: every point carries color information. Using the RGB values of the 3D points in addition to their geometry improved classification accuracy dramatically.
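As one illustration of why color helps, the Excess Green index (ExG = 2g − r − b, computed on chromatic coordinates r = R/(R+G+B) and so on) is a common cue for separating vegetation from gray surfaces such as asphalt or concrete. This is a minimal sketch; the source does not say which color features the classifier uses, so treat `excess_green` as a hypothetical example feature.

```python
import numpy as np


def excess_green(rgb: np.ndarray) -> np.ndarray:
    """Excess Green index (ExG = 2g - r - b) on chromatic coordinates.

    rgb: (N, 3) array of per-point colors on any positive scale (e.g. 0-255).
    Returns an (N,) array; higher values indicate greener points.
    """
    rgb = rgb.astype(float)
    total = rgb.sum(axis=1, keepdims=True)
    # Normalize each color by its brightness; pure-black points stay at zero.
    chroma = np.divide(rgb, total, out=np.zeros_like(rgb), where=total > 0)
    r, g, b = chroma[:, 0], chroma[:, 1], chroma[:, 2]
    return 2 * g - r - b


colors = np.array([[60, 140, 50],     # grass-like green
                   [120, 120, 120],   # gray asphalt
                   [0, 0, 0]])        # black (no color)
print(excess_green(colors))  # approx. [0.68, 0.0, 0.0]
```

Normalizing by brightness makes the index less sensitive to shadows, which matters for drone imagery taken under changing light.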

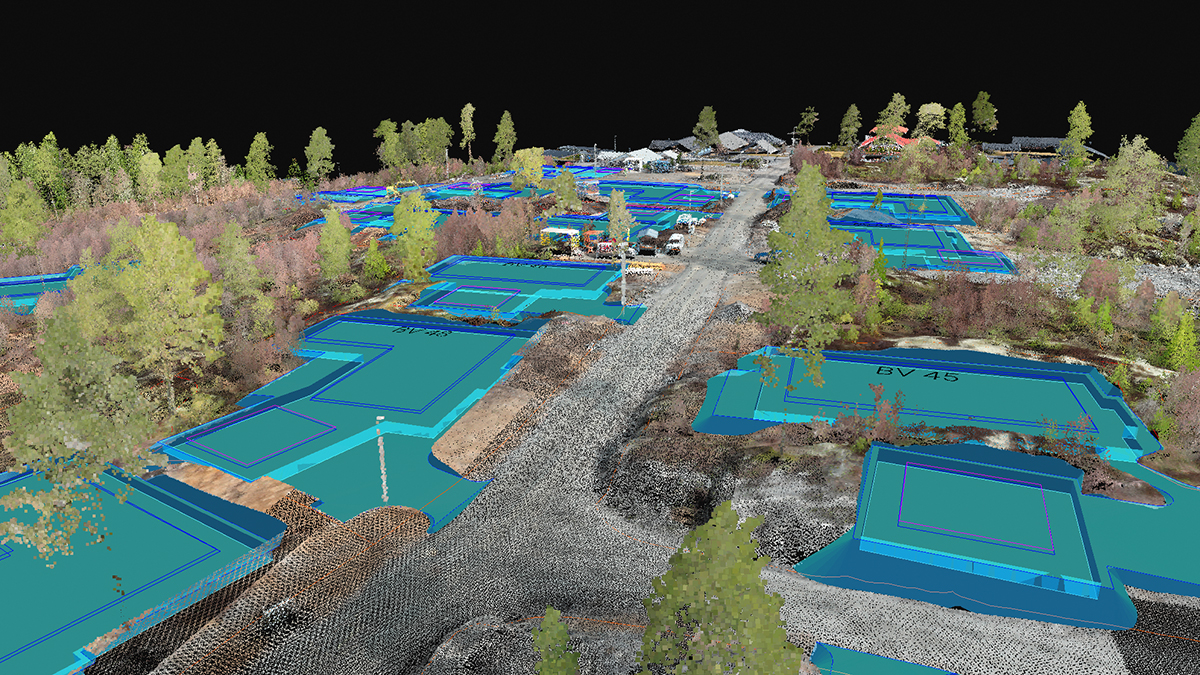

Our approach is to train the classifier on geometric features computed at multiple scales, plus additional color features, all computed from the reference point and its neighboring points. The classifier can then predict the class of that specific point, such as vegetation, ground, or building. Training on manually labeled classes adjusts the classifier's parameters so that they better fit the training datasets.
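The paragraph above does not spell out the exact features, so the following is only a sketch of the general idea under stated assumptions: for each neighborhood radius (the "scales"), it computes covariance-eigenvalue features (linearity, planarity, scattering, verticality), which are widely used for point cloud classification, and then appends the point's own color and its neighbors' mean color. The function `point_features`, the radii, and the feature choice are hypothetical, not Pix4D's implementation.

```python
import numpy as np


def point_features(xyz, rgb, index, radii):
    """Hypothetical feature vector for one point: geometry at several scales + color.

    xyz: (N, 3) coordinates in meters; rgb: (N, 3) colors scaled to [0, 1].
    index: position of the reference point; radii: neighborhood radii in meters.
    """
    dist = np.linalg.norm(xyz - xyz[index], axis=1)  # brute-force neighbor search
    features = []
    for radius in radii:
        neighbors = xyz[dist <= radius]
        if len(neighbors) < 3:
            features += [0.0] * 4  # too few points for a meaningful covariance
            continue
        # Eigen-decomposition of the local covariance; eigh sorts eigenvalues ascending.
        evals, evecs = np.linalg.eigh(np.cov(neighbors, rowvar=False))
        l3, l2, l1 = np.maximum(evals, 0.0)
        if l1 == 0.0:
            features += [0.0] * 4  # all neighbors coincide
            continue
        normal = evecs[:, 0]  # direction of least variance, i.e. the surface normal
        features += [
            (l1 - l2) / l1,        # linearity: high for wires and edges
            (l2 - l3) / l1,        # planarity: high for ground and flat roofs
            l3 / l1,               # scattering: high for vegetation
            1.0 - abs(normal[2]),  # verticality: ~0 for horizontal, ~1 for walls
        ]
    # Color: the point's own RGB plus the mean RGB of its neighbors at the largest scale.
    neighborhood = dist <= max(radii)
    features += list(rgb[index]) + list(rgb[neighborhood].mean(axis=0))
    return np.array(features, dtype=float)


grid = np.arange(5.0)
xx, yy = np.meshgrid(grid, grid)
xyz = np.column_stack([xx.ravel(), yy.ravel(), np.zeros(25)])  # a flat 5x5 patch
rgb = np.full((25, 3), 0.5)
f = point_features(xyz, rgb, index=12, radii=[1.5, 3.0])
print(f.shape)  # (14,)
```

On this flat patch, planarity is close to 1 and scattering and verticality are close to 0 at both scales. Using several radii lets the same point look like "flat roof" at a small scale and "building edge" at a larger one; a classifier could then be trained on these vectors.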

“We are very happy to see the huge response and early adoption of the point cloud classification from our users. Our next steps are to use more training data and post-process the results to provide cleaner and more reliable results.” – Carlos Becker

“I am glad to be part of the team that developed the feature using machine-learning technology. With the extraction of geometric and color features, we are able to provide a fast classification routine to all Pix4D users.” – Nicolai Häni

Teach the algorithm to learn

Imagine the initial classifier as a newborn baby learning to understand its surroundings, much as any human being learns. We need to teach it which objects are trees, which are buildings, and so on, so that the program can learn from instruction and experience. Currently, the classifier works best on projects with flat-roofed buildings, scattered high vegetation, ground-level asphalt roads, and a GSD (ground sampling distance) of around 5 centimeters.
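To check whether a project is close to the 5 cm GSD mentioned above, you can use the standard GSD relation for a nadir image over flat terrain: GSD (cm/px) = (sensor width [mm] × flight height [m] × 100) / (focal length [mm] × image width [px]). The sketch below implements it and its inverse; the camera values (13.2 mm sensor width, 8.8 mm focal length, 5472 px image width) are a hypothetical example, not a recommendation.

```python
def gsd_cm_per_px(sensor_width_mm, focal_length_mm, flight_height_m, image_width_px):
    """Ground sampling distance in cm/pixel for a nadir image over flat terrain."""
    return (sensor_width_mm * flight_height_m * 100) / (focal_length_mm * image_width_px)


def flight_height_for_gsd(gsd_cm, sensor_width_mm, focal_length_mm, image_width_px):
    """Invert the GSD formula: flight height in meters for a target GSD."""
    return (gsd_cm * focal_length_mm * image_width_px) / (sensor_width_mm * 100)


# Hypothetical camera: 13.2 mm sensor width, 8.8 mm focal length, 5472 px wide images.
height = flight_height_for_gsd(5.0, 13.2, 8.8, 5472)
print(round(height, 1))  # 182.4
```

With this example camera, flying at roughly 180 m above ground gives about 5 cm per pixel; flying lower gives a finer GSD.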

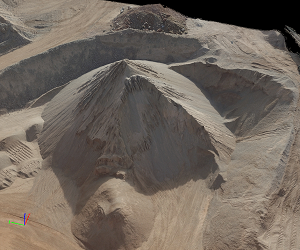

These are the kinds of projects we trained our classifier on, because many of our current users process similar projects. You may notice that tall stockpiles are recognized as buildings and sharp-roofed buildings are categorized as high vegetation. The best way to solve such issues is to provide human supervision to the classifier.
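The effect of human supervision can be shown with a toy example. The sketch below uses a nearest-centroid classifier, swapped in purely for brevity (the source does not name the classifier Pix4D uses), on two hypothetical features: height above ground in meters and mean local slope. All values are made up for illustration. A stockpile point is first labeled "building"; after a user contributes a few labeled stockpile samples, the class centroids (the classifier's parameters) change and the same point is classified correctly.

```python
import numpy as np


def fit_centroids(features, labels):
    """'Train' a nearest-centroid classifier: one mean feature vector per class."""
    labels = np.asarray(labels)
    return {c: features[labels == c].mean(axis=0) for c in np.unique(labels)}


def predict(centroids, x):
    """Return the class whose centroid is closest (Euclidean) to feature vector x."""
    return min(centroids, key=lambda c: np.linalg.norm(x - centroids[c]))


# Hypothetical features: [height above ground (m), mean slope].
X = np.array([[0.0, 0.05], [0.2, 0.1],    # ground
              [8.0, 0.0], [10.0, 0.1],    # flat-roofed building
              [6.0, 0.9], [8.0, 1.0]])    # high vegetation
y = ["ground", "ground", "building", "building",
     "high_vegetation", "high_vegetation"]
pile = np.array([8.5, 0.4])  # a tall stockpile point

print(predict(fit_centroids(X, y), pile))  # building (wrong)

# A user contributes two correctly labeled stockpile samples.
X2 = np.vstack([X, [[8.0, 0.4], [9.0, 0.45]]])
y2 = y + ["stockpile", "stockpile"]
print(predict(fit_centroids(X2, y2), pile))  # stockpile
```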

Want to share your files and help us train the classifier?

Bringing machine-learning technology into the software is a breakthrough, but it depends on feedback from our users. To build a well-trained, or “smart,” classifier, we need to answer one question: “What do our users need the classifications for?” The more real cases we get from our users, the better the classifier will perform in practical applications.

Do you regularly need to remove vegetation from stockpiles? Send us classified point clouds from such projects with the groups correctly labeled, and the classifier will get better at distinguishing stockpiles from vegetation! You don’t need to code to act as an inspector of, and a participant in, this machine-learning technology. Share your projects, well-classified point clouds, or any feedback with us, and you will be amazed by the huge potential this technology brings in the near future!